For a long time I've been using a texture cache I coded for my own builds. It hasn't been uploaded to CVS yet (and I don't know whether it ever will be), as it needs a cleanup and uses some C++-only stuff that would be time-consuming to port.

The main benefit you get from using a cache is uploading to OpenGL as little as possible per frame. The idea is rather simple: whenever a texture is about to be uploaded, you compute some sort of magic number from it (I currently use a kind of CRC) to use as an identifier. If that identifier is already in the cache, you just bind the cached texture; if not, you upload the texture to OpenGL and add it to the cache for later use.
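
A minimal sketch of that lookup, not the actual code from my builds. The names `TextureCache`, `TextureHandle`, `crc32_bytes` and the uploader callback are made up for illustration, and the callback stands in for the real OpenGL upload so the example compiles without a GL context:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical stand-in for an OpenGL texture name (GLuint in real code).
using TextureHandle = std::uint32_t;

// Plain bitwise CRC-32 (reflected polynomial 0xEDB88320).
// Any reasonable checksum or hash could be used as the identifier instead.
inline std::uint32_t crc32_bytes(const std::uint8_t* data, std::size_t size) {
    std::uint32_t crc = 0xFFFFFFFFu;
    for (std::size_t i = 0; i < size; ++i) {
        crc ^= data[i];
        for (int bit = 0; bit < 8; ++bit)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
    }
    return ~crc;
}

class TextureCache {
public:
    // Assumption: the uploader does the real work (creating the GL texture
    // and sending the texels) and returns the handle to bind afterwards.
    using Uploader = std::function<TextureHandle(const std::vector<std::uint8_t>&)>;

    explicit TextureCache(Uploader upload) : upload_(std::move(upload)) {
        assert(upload_ && "an uploader callback is required");
    }

    // Returns the handle to bind, uploading only on a cache miss.
    TextureHandle get(const std::vector<std::uint8_t>& texels) {
        const std::uint32_t key = crc32_bytes(texels.data(), texels.size());
        auto it = cache_.find(key);
        if (it != cache_.end()) return it->second;  // hit: reuse the old upload
        const TextureHandle handle = upload_(texels);  // miss: upload once
        cache_.emplace(key, handle);
        return handle;
    }

    std::size_t size() const { return cache_.size(); }

private:
    Uploader upload_;
    std::unordered_map<std::uint32_t, TextureHandle> cache_;
};
```

Calling `get()` twice with the same texels runs the uploader only once. Note that the checksum is the only key here, so two different textures that happen to share a CRC would be treated as the same one.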

My current implementation lacks one important feature that wouldn't be hard to add: flushing unused textures. Dynamic textures and textures that are no longer used (from the previous level, a menu or whatever else) remain in the cache and, more importantly, in the graphics card's memory. Right now that's not much of a problem, but I'm sure it would be after a few hours of gameplay.
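
One way such a flush could look, as a sketch only (this is not implemented in my builds): stamp every entry with the frame it was last used in, and evict whatever has been idle for too long. `AgingTextureCache`, `maxIdleFrames` and the deleter callback are hypothetical names; in a real renderer the deleter would be where `glDeleteTextures` gets called.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_map>
#include <utility>

using TextureHandle = std::uint32_t;  // stand-in for GLuint

class AgingTextureCache {
public:
    using Deleter = std::function<void(TextureHandle)>;

    AgingTextureCache(std::uint64_t maxIdleFrames, Deleter release)
        : maxIdleFrames_(maxIdleFrames), release_(std::move(release)) {
        assert(release_ && "a deleter callback is required");
    }

    // Registers a freshly uploaded texture under its identifier.
    void insert(std::uint32_t key, TextureHandle handle) {
        auto it = entries_.find(key);
        if (it != entries_.end()) release_(it->second.handle);  // drop the old one
        entries_[key] = Entry{handle, frame_};
    }

    // Looks a key up and marks it as used this frame. Returns false on a miss.
    bool lookup(std::uint32_t key, TextureHandle& out) {
        auto it = entries_.find(key);
        if (it == entries_.end()) return false;
        it->second.lastUsedFrame = frame_;
        out = it->second.handle;
        return true;
    }

    // Call once per rendered frame: evicts entries idle for more than
    // maxIdleFrames frames, then advances the frame counter.
    void endFrame() {
        for (auto it = entries_.begin(); it != entries_.end();) {
            if (frame_ - it->second.lastUsedFrame > maxIdleFrames_) {
                release_(it->second.handle);
                it = entries_.erase(it);
            } else {
                ++it;
            }
        }
        ++frame_;
    }

    std::size_t size() const { return entries_.size(); }

private:
    struct Entry {
        TextureHandle handle;
        std::uint64_t lastUsedFrame;
    };

    std::uint64_t maxIdleFrames_;
    Deleter release_;
    std::uint64_t frame_ = 0;
    std::unordered_map<std::uint32_t, Entry> entries_;
};
```

The idle threshold is a trade-off: too low and textures that flicker in and out get uploaded again and again, too high and leftovers from the previous level stay in video memory longer than needed.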

I also toyed a bit with checking the paletted texture formats directly, prior to conversion, to save some precious cycles, but that lacks serious testing: paletted formats have far less data, so CRCs are likely to collide easily, giving false matches on the cache lookup and glitching the rendering. Anyway, it seems important, as it can save quite a lot of CPU time.
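
A sketch of what keying on the unconverted data might look like, under some assumptions of mine: the key is widened to 64 bits and covers the dimensions, the format and the palette as well as the index data, so that two textures sharing the same indices but using different palettes do not match. Whether that is the actual source of the false matches is untested. `PalettedTexture`, `fnv1a` and `cacheKey` are hypothetical names, and FNV-1a is swapped in for the CRC purely to keep the example short.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of a paletted texture before conversion.
struct PalettedTexture {
    std::uint16_t width;
    std::uint16_t height;
    std::uint8_t format;                 // which paletted mode is in use
    std::vector<std::uint8_t> indices;   // packed palette indices
    std::vector<std::uint16_t> palette;  // colours as stored by the game
};

// 64-bit FNV-1a, continued from a running hash value.
inline std::uint64_t fnv1a(std::uint64_t hash, const void* data, std::size_t size) {
    const auto* bytes = static_cast<const std::uint8_t*>(data);
    for (std::size_t i = 0; i < size; ++i) {
        hash ^= bytes[i];
        hash *= 0x100000001B3ull;  // FNV prime
    }
    return hash;
}

// Builds the cache key from everything that affects the converted result.
inline std::uint64_t cacheKey(const PalettedTexture& t) {
    assert(!t.indices.empty() && !t.palette.empty());
    std::uint64_t h = 0xCBF29CE484222325ull;  // FNV offset basis
    h = fnv1a(h, &t.width, sizeof t.width);
    h = fnv1a(h, &t.height, sizeof t.height);
    h = fnv1a(h, &t.format, sizeof t.format);
    h = fnv1a(h, t.indices.data(), t.indices.size());
    h = fnv1a(h, t.palette.data(), t.palette.size() * sizeof(std::uint16_t));
    return h;
}
```

A wider key only makes collisions rarer, it does not rule them out. If glitches still show up, the cache could keep a copy of the raw data and compare it on every hit, at the cost of some of the cycles this was meant to save.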

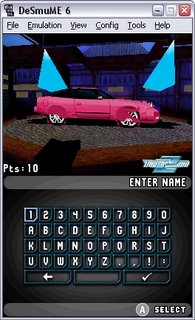

Today's screenshot is based on some optimizations on the CRC creation, as some profiling showed it was taking too much time to compute. I changed a bit the way it works (and expect it to work as good as in the past :P) so I could get a bit more of performance. Along with some optimizations here and there, that's what I got:

That's running on the same configuration as the previous screenshots, a Northwood Pentium 4 at 2.6 GHz with a GeForce FX 5600. I expect to get a bit more speed in the future, but I'm not sure how much, as I've been unable to work on desmume in the last 5-6 days. Oh, and the emulator menu is different from the previous screenshots because this is the build I use to develop stuff before merging it into CVS: I never cared to change the menus from the base source code yopyop released.